In this post, University of Northern British Columbia instructor and 2020–2021 BCcampus Research Fellow Meghan Costello shares her experience using a non-traditional assessment for her online first-year physics courses and the resulting influence on student learning.

In a traditional first-year physics course, the proctored midterms and final exam are key components of the course structure. Interactive, engaging lectures along with assignment questions that consist of relevant problems certainly make the course more meaningful for students. However, as much as they may enjoy these other course components, many students believe the key purpose of these elements is to help prepare them for the final exam. Unfortunately, a growing problem with this course structure is that the solution to every traditional first-year physics problem is available online (sites like Chegg and Slader are just two examples). As a result, increasing numbers of students rely on online homework “help” and develop a false sense of how well they understand the course material (e.g., the student who turns in nearly perfect assignments all semester and then shows up to end-of-semester office hours having only a foggy idea as to what the variables represent). In the traditional physics course structure, the motivation for students to understand the course material is largely upheld by the proctored exams. With the pivot to online learning (and unless a department is willing to employ invasive online-proctoring services), the possibility of proctored exams has been removed.

With the current norm of fully online course delivery, how can we restructure first-year physics courses so the use of non-traditional assessment techniques does not further contribute to this problem—and, ideally, helps provide a solution? One might imagine the solution is for instructors to make up all midterm and final exam questions themselves. Although this is certainly a good idea, unfortunately, any student who wishes to circumvent the academic integrity process can simply upload the questions to a “tutoring” website, and solutions will pop out in as little as 20 minutes. I believe the solution starts with restructuring physics questions in a way that requires increased student engagement and cannot be answered by a student simply looking up the calculation steps on the internet.

My BCcampus Research Fellows project is titled “Effectively Moving Away from Traditional Proctored Exams in First-Year Physics Courses.” Since summer 2020, I have taught four online first-year physics courses in which students were required to explain some assignment and exam questions via recorded video.

How It Works

Early in the course (specifically in question 1 on assignment 1), I ask students to upload a short video introducing themselves to the class. They are free to share whatever information they wish in this video. The purpose of the introduction videos is not only to build community but also to have the students learn the process of recording and uploading a video to the learning management system. Although I post an instruction video to demonstrate the video-upload process, there are invariably some technical issues for students to work out (e.g., certain devices can upload video only using certain web browsers). It is best to sort out these issues early in the course, before students become too busy with actual coursework.

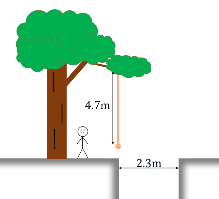

Then, throughout the course, I have students explain occasional assignment questions via recorded video, and I include one video-explanation question on each midterm and the final exam. The types of questions I assign for video explanation are not typical calculation problems out of a physics textbook. As an example, considering the diagram above, a typical textbook question would be: How fast must this person be running in order to grab the rope and successfully swing across the ravine?

In my video-explanation questions, I require a thorough explanation of the steps behind the calculation. For this specific question I asked the students to do the following:

- List the forces that act on the person, and classify them as conservative or non-conservative.

- Explain whether mechanical energy is conserved based on specific criteria I provide.

- Show how trigonometry can be used to calculate the relevant distances.

- Find the minimum speed the person must be running to make it across the ravine.
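To show the kind of reasoning these steps ask for, here is a minimal worked sketch. The geometry is an assumption, since the diagram's values are not reproduced here: the person grabs a rope of length $L$ at the bottom of its arc while running at speed $v$, and must swing out to an angle $\theta$ from the vertical to clear the ravine. The symbols $L$, $\theta$, and $h$ are illustrative and not taken from the original question. Air resistance is neglected.

```latex
% Forces on the person: gravity (conservative) and rope tension (non-conservative).
% Tension acts perpendicular to the direction of motion, so it does no work,
% and mechanical energy is conserved during the swing.

% Trigonometry: height gained when the rope makes an angle \theta with the vertical
\[ h = L - L\cos\theta = L\,(1 - \cos\theta) \]

% Energy conservation between the bottom of the swing and the far side,
% where the minimum-speed case arrives with zero speed
\[ \tfrac{1}{2} m v_{\min}^{2} = m g h \]

% Minimum running speed (the mass cancels)
\[ v_{\min} = \sqrt{2 g L\,(1 - \cos\theta)} \]
```

A written solution found online typically contains only the last line. The video explanation asks the student to justify each step that leads to it.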

For some other video-explanation questions, I have had the students do short projects, such as determining the coefficient of static friction between their calculator and textbook.
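One common way to do such a measurement, offered here only as an illustrative sketch (the method the students actually used is not specified), is to rest the calculator on the textbook and tilt the book slowly until the calculator just begins to slide. The critical angle $\theta_c$ and the other symbols below are assumptions introduced for this example.

```latex
% At the critical angle \theta_c the calculator is on the verge of slipping,
% so static friction is at its maximum value \mu_s N.

% Perpendicular to the incline: the normal force balances a component of gravity
\[ N = m g \cos\theta_c \]

% Along the incline: maximum static friction balances the other component
\[ \mu_s N = m g \sin\theta_c \]

% Dividing the second equation by the first (the mass cancels)
\[ \mu_s = \tan\theta_c \]
```

Under these assumptions, the only measurement needed is the tilt angle, which can be found from the height and length of the tilted book with basic trigonometry.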

In my experience, having seen my midterm questions posted on sites such as Chegg by students in the past, the online tutors do not generally provide a detailed explanation of the background theory behind a problem. As a result, students cannot adequately explain the video-submission questions by simply reading through a written calculation provided by a tutoring website.

Results

Although the primary motivation behind this project was to encourage academic integrity, I have found that having to explain questions via video has had positive effects on student engagement and concept mastery.

The level of student engagement demonstrated in the videos is encouraging. Some students go above and beyond to create props that explain the concepts (e.g., for a video involving a fluid flow equation, using a container of water with holes drilled at the appropriate heights). In the coefficient of static friction project mentioned above, students reported they felt like they were doing real physics. Several students commented that, looking back, they remember the concepts from the video questions much better than the regular (i.e., written) assignment and midterm questions.

Comparing the level of understanding students typically demonstrate in their videos to typical office hours conversations with students pre-pandemic, I find that students’ ability to explain the reasoning behind their calculations is noticeably improved. The process of having to verbally explain their steps helps students identify where they have misunderstandings of the course concepts. A number of students mentioned they often discover conceptual errors in the process of recording their videos (e.g., “This is take three of my video, and I think I finally have it right this time”). There are also students who, rather than rerecording, simply identify and correct their errors in the process of creating their videos. It is interesting to observe this learning process take place.

I allow very flexible timelines for the submission of the video recordings. Although the regular written part of a midterm is due within an hour, I generally allow students an eight- to 12-hour window to submit a recorded video. This is necessary due to issues such as poor internet connections and some students having a job or another class to attend directly after the midterm. This flexible timeline has given the students who need a little more time to mull things over a better opportunity to demonstrate their learning. A number of students mentioned they appreciate the fact that, even if they mess things up in the timed portion of the midterm, there is an opportunity to redeem themselves in their video submission.

Has This Prevented Academic Misconduct?

First, I believe communicating to students on day one of the class that they are expected to regularly appear on video to explain concepts helps deter cheating. Students lead busy and stressful lives, and it is helpful to establish from the start that they will have to properly learn the material to do well in the class.

Has this prevented all attempts at cheating? Particularly in non-majors courses, it has not. However, I believe it has helped make things fairer. For example, I require students to hand in a PDF of their written calculations along with their video explanations. In a number of cases, a student’s written calculations were perfect (e.g., 6/6), but upon watching the corresponding video, it became apparent that the student had no idea what they were talking about and wasn’t even sure what their variables represented. Taking the video explanation into account, a student’s mark may become 1/6, which is a much more appropriate grade for a solution the student clearly did not come up with themselves.

Concluding Thoughts

One of the questions I am often asked about the project is how much extra time is required to mark the videos. Generally, in a small class of, say, 13 students, each set of video submissions takes just over an hour to mark. Given the benefits in terms of both student understanding and allowing me to better get to know my students, I consider that to be worth the extra time. In larger classes, video marking is more time-consuming. This can be managed somewhat by giving students a suggested time frame for their videos (e.g., roughly four minutes). I currently have a TA in each of the larger classes specifically to help with marking the video questions, and I appreciate their assistance!

In our current online delivery format, I consider the video-submission questions to be worth the extra time. Having taught first-year physics for many years, I am well aware of how hard students work to succeed in these courses. It is important the course marks reflect this hard work and are not diminished by a small group of other students also achieving excellent marks by simply copying solutions off the internet. I also appreciate how video submissions have allowed an opportunity for students who are not typically “good” test writers to accurately demonstrate their knowledge.